It’s my great pleasure to announce that you can now access the Apache Flink web user interface through Decodable’s Custom Pipeline feature! In this blog post, I’ll show you how to use the Apache Flink web UI to monitor your custom pipelines and also go through some recent improvements to the Decodable SDK for Custom Pipelines.

The Apache Flink UI

Introduced earlier this year, Decodable Custom Pipelines (currently in Tech Preview) complement SQL-based pipelines by giving you the full flexibility of the Flink APIs for implementing your stream processing use cases. This comes in handy when SQL isn’t expressive enough, when you’d like to use a connector that isn’t currently available out of the box in Decodable, or when you’d simply like to migrate existing Flink jobs to a managed execution environment, sparing you from operating your own Flink clusters.

Whatever your motivation for running custom Flink jobs, the web UI is an invaluable tool for interacting with your jobs, observing their status and health, and examining logs and metrics. Starting now, you can use the Apache Flink web UI to interact with and monitor your custom pipelines.

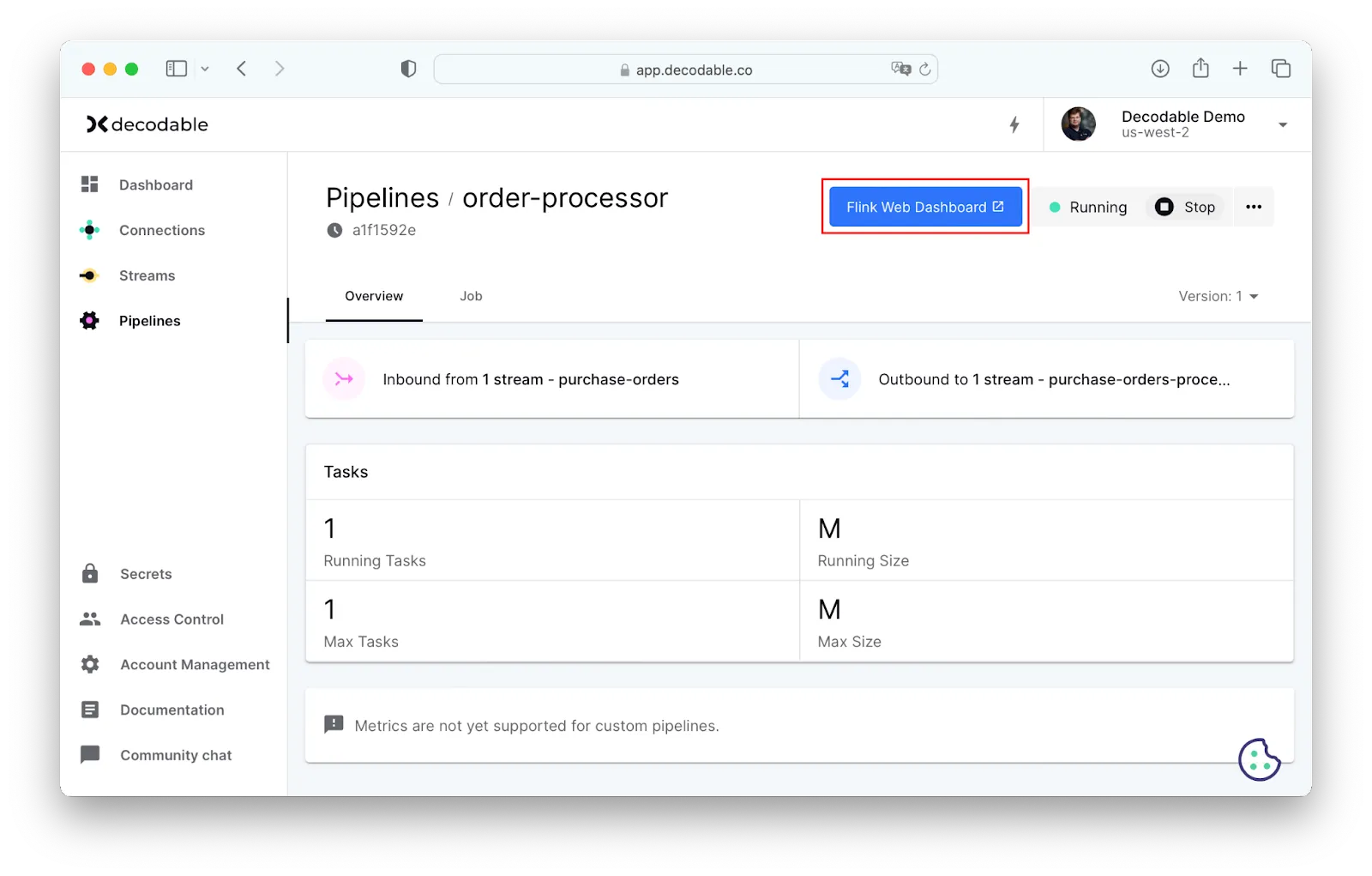

To open the Apache Flink web UI, follow these steps:

- From the Decodable web console, select Pipelines.

- Select a custom pipeline. The Overview page for that pipeline opens.

- Select Flink Web Dashboard. The Flink web UI opens in a new browser tab or window.

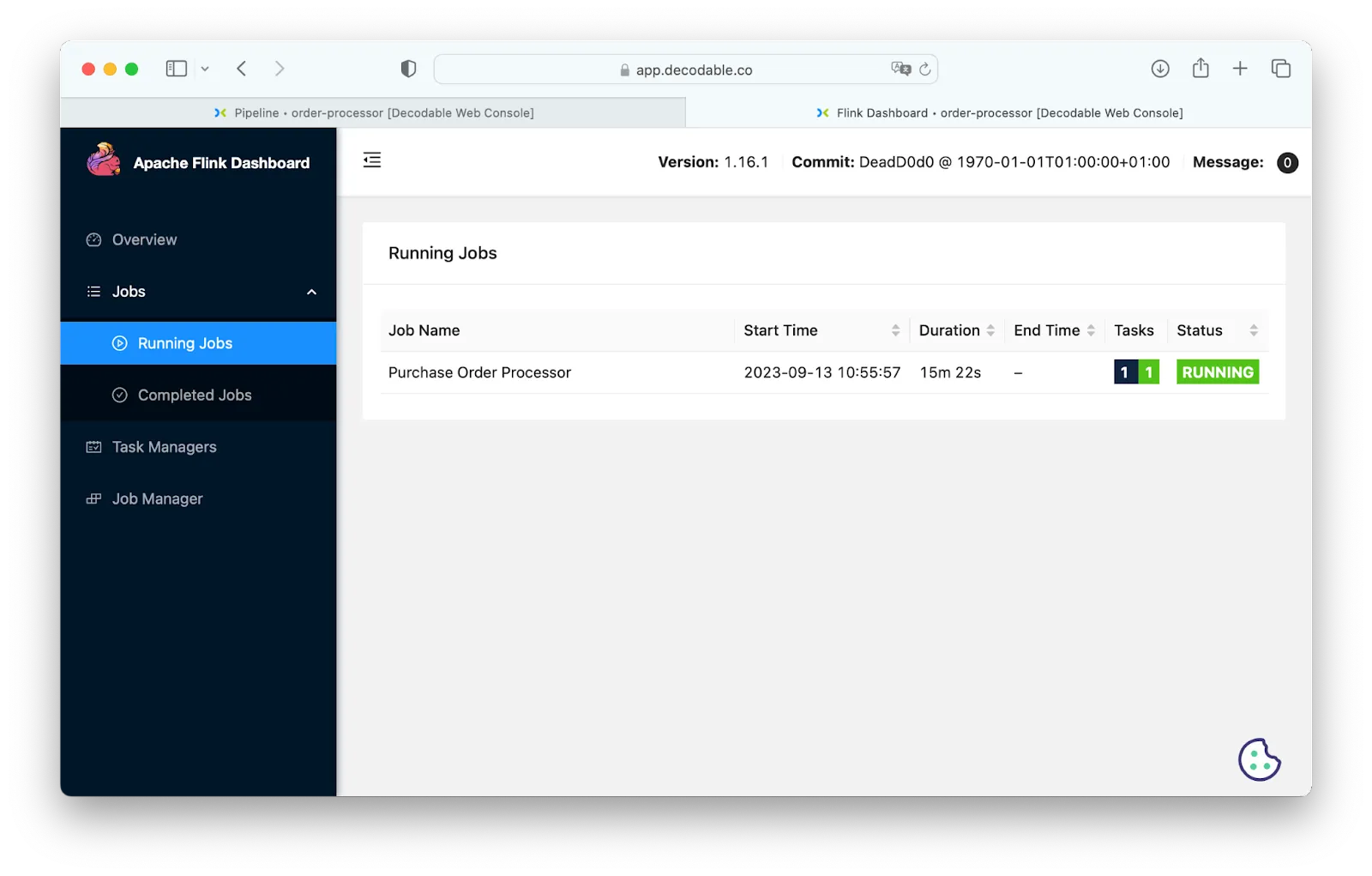

Within the Apache Flink web UI itself, you can perform common tasks such as examining the history of checkpoints, monitoring for backpressure (and, for instance, using that information to resize the job), analyzing watermarks and task metrics, retrieving the job logs, and much more.

Adding support for the Apache Flink web UI takes Custom Pipelines to the next level, helping users feel confident about running their jobs in production. We can’t wait for you to give it a try and share your feedback!

Stream Metadata in the Custom Pipeline SDK

When adding support for deploying custom Flink jobs, one of our design goals was tight integration with the rest of the Decodable platform, such as SQL-based pipelines and fully managed connectors. To that end, the open-source Decodable SDK for Custom Pipelines provides utilities like DecodableStreamSource and DecodableStreamSink, which let you consume data from and produce data to Decodable-managed streams.

I am also excited to announce the release of Decodable SDK version 1.0.0.Beta2, which lets you annotate your jobs with metadata about the streams they connect to:

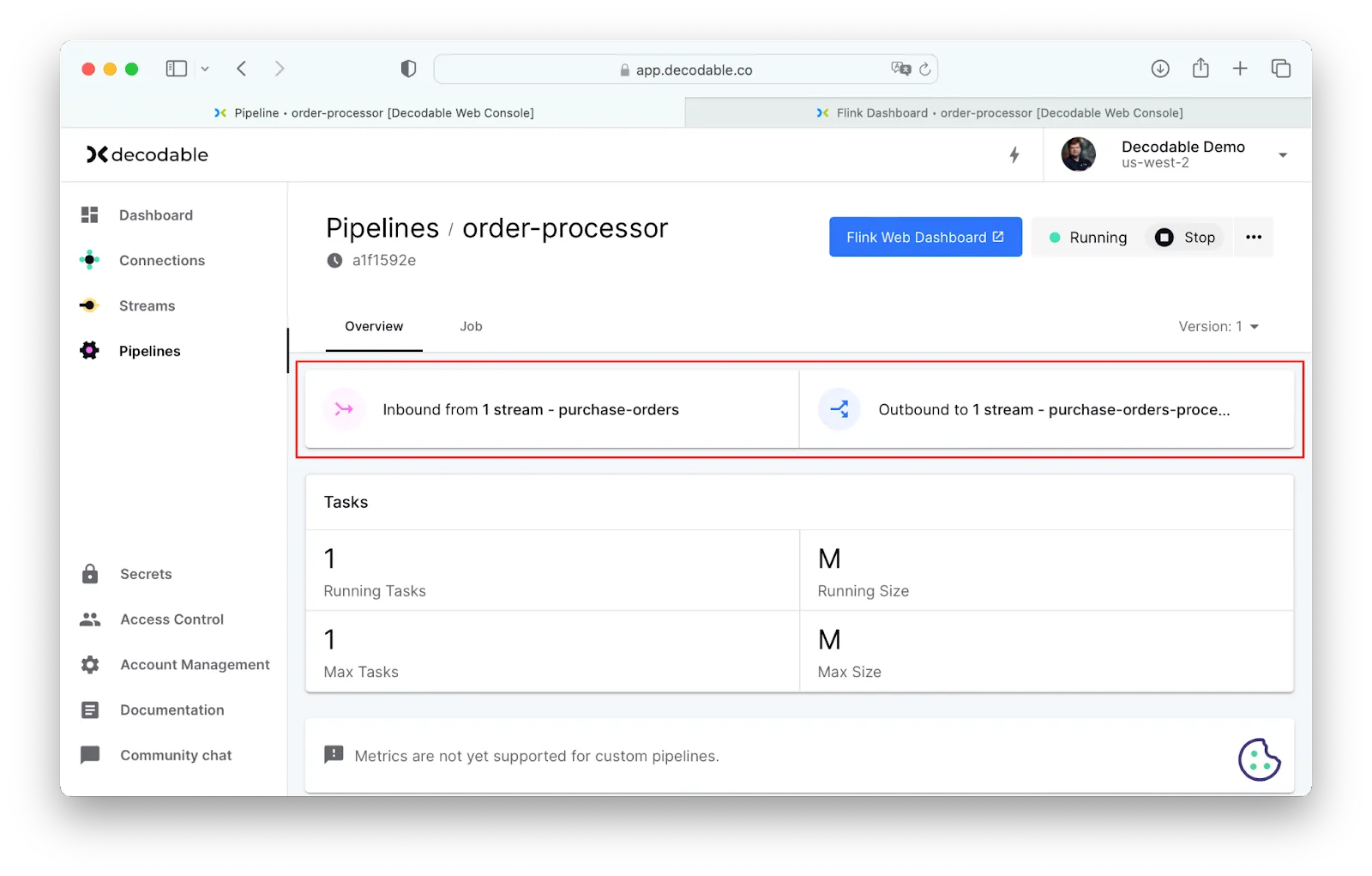

This metadata is used in the lineage bar of the Decodable console, allowing you to easily navigate between pipelines and the streams they read from and write to.

Currently, the SourceStreams and SinkStreams annotations are used to provide the names of all referenced streams. In a future version of the SDK, this metadata will be extracted from the stream topology and propagated to the backend automatically, further simplifying the user experience.
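To illustrate the general idea behind annotation-based stream metadata, here is a minimal, self-contained Java sketch. It does not use the Decodable SDK: the `SourceStreams` and `SinkStreams` annotations below are local stand-ins that only mirror the SDK's annotation names, and their exact definitions in the real SDK may differ. It assumes the annotations are placed on the job's main class with runtime retention, and the job class `PurchaseOrderProcessingJob` and the stream names are hypothetical. The helper methods show how a platform could read the declared stream names via reflection to build lineage information.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.util.Arrays;
import java.util.List;

public class StreamMetadataSketch {

  // Hypothetical stand-in for the SDK's SourceStreams annotation (not the real definition).
  @Retention(RetentionPolicy.RUNTIME)
  @Target(ElementType.TYPE)
  @interface SourceStreams {
    String[] value();
  }

  // Hypothetical stand-in for the SDK's SinkStreams annotation (not the real definition).
  @Retention(RetentionPolicy.RUNTIME)
  @Target(ElementType.TYPE)
  @interface SinkStreams {
    String[] value();
  }

  // Hypothetical job class; the stream names are illustrative only.
  @SourceStreams({"purchase-orders"})
  @SinkStreams({"purchase-orders-processed"})
  static class PurchaseOrderProcessingJob {}

  // Returns the stream names the job declares it reads from (empty if not annotated).
  static List<String> sourceStreams(Class<?> jobClass) {
    SourceStreams annotation = jobClass.getAnnotation(SourceStreams.class);
    return annotation == null ? List.of() : Arrays.asList(annotation.value());
  }

  // Returns the stream names the job declares it writes to (empty if not annotated).
  static List<String> sinkStreams(Class<?> jobClass) {
    SinkStreams annotation = jobClass.getAnnotation(SinkStreams.class);
    return annotation == null ? List.of() : Arrays.asList(annotation.value());
  }

  public static void main(String[] args) {
    System.out.println("reads from: " + sourceStreams(PurchaseOrderProcessingJob.class));
    System.out.println("writes to:  " + sinkStreams(PurchaseOrderProcessingJob.class));
  }
}
```

Declaring the stream names as class-level annotations keeps the metadata next to the job code while staying independent of the Flink topology itself. That is also why extracting the names from the topology, as planned for a future SDK version, would remove the need to keep the two in sync by hand.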

Next Steps

If you haven’t done so yet, you can request access to the Custom Pipelines Tech Preview from the Decodable web UI. Simply choose “Request Custom Pipelines Access” from the drop-down when creating a pipeline. This kicks off provisioning of all the required resources, and Custom Pipelines will be available to you shortly thereafter.

On the near-term roadmap for Custom Pipelines, we are planning to integrate Decodable managed secrets, allowing you to use secrets when, for example, configuring the connectors of a custom Flink job. We’re also working toward exposing custom job metrics to your Datadog instance (akin to what’s already supported for SQL pipelines), the ability to build custom jobs from source in a given Git repository (rather than uploading JAR files), and much more.

Of course, we’d also be very happy to hear about your feature requests for running your custom Flink jobs on Decodable!

Additional Resources

- Have a question for Gunnar? Connect on Twitter or LinkedIn

- Ready to connect to a data stream and create a pipeline? Start free

- Take a guided tour with our Quickstart Guide

- Join our Slack community
